The long-term fetch and place knowledge base contains activity episodes of an autonomous robot setting a table. The challenge in this scenario is that the robot has to perform vaguely formulated tasks such as “put the items needed on the table and arrange them in the appropriate way”. The robot reasons about where to find objects, where to stand to pick them up, and how to pick them up and handle them.

Long-term fetch and place tasks in partially structured environments place autonomous mobile manipulation platforms in challenging, dynamic settings. From recorded datasets of such repeatedly performed, often mundane actions, common structures can be identified and crucial parameters of everyday activities can be extracted. Knowing the general characteristics of how a task should be performed and what to expect while performing it greatly improves the reasoning capabilities and task awareness of an autonomous agent, raising its chances of success.

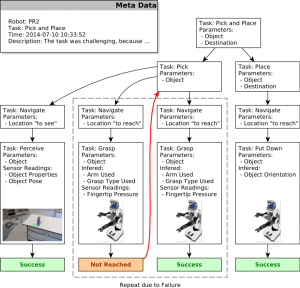

The data describe a robot agent tasked with acquiring objects from vaguely described locations (“on table”) and transporting them to target locations described in a similarly vague manner (“next to dinner plate”). All tasks are described semantically in depth, including which actions were performed (perception, navigation, manipulation) and what their outcomes were.
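To make the idea of semantically annotated tasks concrete, the following is a minimal sketch of how such an episode could be modeled and queried in memory. It is not the knowledge base's actual schema: the `Task` record, its fields (`action_type`, `succeeded`, `location_hint`, `subtasks`), and the helpers `walk` and `outcome_counts` are all hypothetical, chosen only to show a task tree whose leaves are perception, navigation, or manipulation actions with recorded outcomes.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum
from typing import Iterator, List, Optional


class ActionType(Enum):
    PERCEPTION = "perception"
    NAVIGATION = "navigation"
    MANIPULATION = "manipulation"


@dataclass
class Task:
    # Hypothetical record for one annotated task; not the dataset's real schema.
    name: str
    action_type: Optional[ActionType]  # None for composite tasks such as "fetch"
    succeeded: bool
    location_hint: Optional[str] = None  # vague description, e.g. "on table"
    subtasks: List["Task"] = field(default_factory=list)


def walk(task: Task) -> Iterator[Task]:
    """Yield a task and all of its subtasks, depth-first."""
    yield task
    for sub in task.subtasks:
        yield from walk(sub)


def outcome_counts(root: Task) -> Counter:
    """Count (action type, succeeded) pairs over all primitive actions in the tree."""
    return Counter(
        (t.action_type, t.succeeded) for t in walk(root) if t.action_type is not None
    )


# Illustrative episode: the first perception attempt fails, a retry succeeds.
episode = Task("fetch cup", None, True, "on table", [
    Task("detect cup", ActionType.PERCEPTION, False, "on table"),
    Task("detect cup", ActionType.PERCEPTION, True, "on table"),
    Task("go to table", ActionType.NAVIGATION, True),
    Task("grasp cup", ActionType.MANIPULATION, True),
])
counts = outcome_counts(episode)
print(counts[(ActionType.PERCEPTION, False)])  # 1
```

Keeping the vague location hint alongside each action is an assumed design choice. It would let an analysis relate underspecified instructions such as “on table” to how often the corresponding perception or manipulation actions succeeded.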

The physical state of the robot agent is tracked over time and stored for each time point. Perception requests and their results are available, allowing reasoning about which objects were detected where and what their exact properties were (as reported by the perception system at that time). With complete information about task success and failure, a detailed analysis can be conducted of why a task failed and which task resolved the failure.
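The two kinds of queries described above (the robot's state at a given time, and which task resolved a failure) can be sketched as follows. This is a hypothetical illustration, not the knowledge base's query interface: `StateSample`, `TaskEvent`, `state_at`, and `resolving_tasks` are invented names, the state is reduced to a planar base pose, and “the first later task with the same goal that succeeded” is an assumed heuristic for identifying the resolving task.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class StateSample:
    # Hypothetical state snapshot; a real log may store far more than a base pose.
    time: float
    base_pose: Tuple[float, float, float]  # x, y, yaw (assumed layout)


def state_at(samples: List[StateSample], t: float) -> Optional[StateSample]:
    """Return the latest sample recorded at or before time t (samples sorted by time)."""
    times = [s.time for s in samples]
    i = bisect_right(times, t)
    return samples[i - 1] if i > 0 else None


@dataclass(frozen=True)
class TaskEvent:
    # Hypothetical task log entry; frozen so it can serve as a dict key.
    time: float
    name: str
    goal: str
    succeeded: bool


def resolving_tasks(events: List[TaskEvent]) -> Dict[TaskEvent, Optional[TaskEvent]]:
    """Map each failed task to the first later successful task with the same goal.

    The 'same goal, later success' rule is an assumed heuristic for illustration.
    """
    ordered = sorted(events, key=lambda e: e.time)
    result: Dict[TaskEvent, Optional[TaskEvent]] = {}
    for i, ev in enumerate(ordered):
        if ev.succeeded:
            continue
        result[ev] = next(
            (later for later in ordered[i + 1:] if later.goal == ev.goal and later.succeeded),
            None,
        )
    return result


samples = [
    StateSample(0.0, (0.0, 0.0, 0.0)),
    StateSample(1.0, (0.5, 0.0, 0.0)),
    StateSample(2.0, (1.0, 0.2, 1.57)),
]
print(state_at(samples, 1.5).base_pose)  # (0.5, 0.0, 0.0)

events = [
    TaskEvent(3.0, "perceive", "detect cup", False),
    TaskEvent(4.0, "move-head", "look at table", True),
    TaskEvent(5.0, "perceive", "detect cup", True),
]
fixes = resolving_tasks(events)
```

Sorting the samples once and using binary search keeps each time lookup logarithmic in the log length, which matters when the state is stored at every time point of a long episode.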

The data contained in these datasets include: