Safe human-robot activity – Details

WARNING: Deprecated by Michael and Patrick (16.10.2014). Michael wants the details section moved into the overview page.

The Safe Human-Robot Activity dataset records an experiment in which a robot shares its workspace with a human co-worker. It contains the poses of perceived human body parts, estimated external forces acting on the robot arms, the robot joint state, and semantic descriptions of the performed actions and safety reactions. We believe that the ability to recall safety-related events from such a knowledge base will make robots safety-aware.

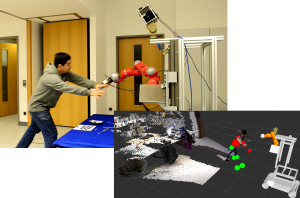

Visualization of a safety-critical event in the knowledge base.

Description of the data

This dataset contains the following data:

- Semantic memory (OWL log) of all performed actions, with their parametrizations and outcomes

- Physical transformation (TF) data of all perceived links of the human co-worker

- Head-camera pictures taken at key moments (before and after perceiving and manipulating instruments)

- Metadata covering the hardware used, the experiment conducted, a short description, and the experiment’s duration

- Complete robot joint state including estimated external torques acting on the arms

- Detected safety events (collision types) and the automated low-level reactions they triggered, e.g. soft stop, hard stop, or zero-g
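
To illustrate how the safety events listed above might be recalled once loaded, here is a minimal Python sketch. It is not the dataset's actual schema or API: the `SafetyEvent` record, its field names, the `Reaction` enum, and the `events_with_reaction` helper are all hypothetical, and the sample records are made up. Only the reaction names (soft stop, hard stop, zero-g) come from the list above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Reaction(Enum):
    # Reaction names come from the dataset description; the enum is hypothetical.
    SOFT_STOP = "soft stop"
    HARD_STOP = "hard stop"
    ZERO_G = "zero-g"


@dataclass
class SafetyEvent:
    # All field names are assumptions made for this sketch.
    timestamp: float      # seconds since the start of the experiment
    collision_type: str   # free-form label; the real taxonomy is not given here
    reaction: Reaction    # low-level reaction triggered by the event


def events_with_reaction(events: List[SafetyEvent],
                         reaction: Reaction) -> List[SafetyEvent]:
    """Return the events that triggered the given reaction, ordered by time."""
    matching = (e for e in events if e.reaction is reaction)
    return sorted(matching, key=lambda e: e.timestamp)


# Made-up records, used only to exercise the query.
log = [
    SafetyEvent(12.4, "example-collision-a", Reaction.SOFT_STOP),
    SafetyEvent(3.1, "example-collision-b", Reaction.HARD_STOP),
    SafetyEvent(7.8, "example-collision-a", Reaction.SOFT_STOP),
]
soft_stops = events_with_reaction(log, Reaction.SOFT_STOP)
```

In the real knowledge base, such a query would presumably run against the OWL log rather than an in-memory list. The sketch only shows the kind of question the data is meant to answer.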

Acknowledgements

The development of the safety-aware knowledge base has received funding from the EU FP7 SAPHARI project: